Improving Link Quality

As a site grows, it easily accumulates dead links. On a forum, for example, users include links in their posts that later become obsolete. The result is a growing number of external links to resources that:

- No longer exist

- Now point to parked domains

- Redirect to other resources, sometimes very undesirable

- Display an error page
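As a rough illustration, some of these categories can be told apart from the HTTP response alone. The Python sketch below assumes you already have the final status code and final URL for a link; the function name `classify_link` and its bucket labels are made up for this example. A parked domain usually answers with a normal page, so it lands in the "ok" bucket here and still needs a human look.

```python
from urllib.parse import urlparse


def _host(url):
    """Return the lowercased host of a URL without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    if host.startswith("www."):
        host = host[4:]
    return host


def classify_link(original_url, status, final_url):
    """Sort one already-fetched link into a rough bucket.

    status is the final HTTP status code, or None when the request
    failed outright (DNS failure, timeout, refused connection).
    final_url is the URL the request ended up at after redirects.
    """
    if status is None or status in (404, 410):
        return "gone"
    if status >= 400:
        return "error"
    # Crude heuristic: landing on a different host counts as a redirect
    # that deserves a second look. It does not judge the destination.
    if _host(original_url) != _host(final_url):
        return "redirected-elsewhere"
    return "ok"
```

Comparing hosts is deliberately simple. A redirect from `example.com` to `www.example.com` is treated as harmless, while a jump to an unrelated host is flagged for review rather than declared bad.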

I think there is reason to believe this can hurt a site's standing with search engines. There has been plenty of talk over the last couple of years about link quality, not just quantity. Google is probably no longer computing PageRank based on quantity alone. It likely uses other metrics to judge the strength of a link, and discounts some links based on certain factors. Quality assessments may include link age, age of site, domain history, link neighborhood quality, link location, the likelihood of the link being a paid ad, and surely also the overall quality of the site's external links.

Dead links and similar problems are something we can fix, and fixing them raises the quality of our site, not only for search engines but also for users. I’m sure search engineers like sites that contain a higher percentage of good links, because their spiders bring back more quality content in less time. And they probably don’t want to send their searchers to pages that contain bad links.

Here are a few ways you can do something about the problem:

- Use a link checking tool like Xenu’s Link Sleuth. It’s free and well developed, lets you exclude certain parts of your site, and can be adjusted to use more or fewer resources. I would advise setting the number of threads to about 5 when you start. Some hosts may not like the spider hitting too heavily at once; I once had an account temporarily suspended, so start out slow to keep that from happening to you.

- Sign up for an account on Google Webmaster Tools. Then verify your site, and Google will begin providing you with regular reports about bad links on your site. After logging into your account, select Diagnostics, and then Web Crawl to see what Google has found. The main disadvantage of this tool is that it only reports bad links that point to pages on your own site. It does not tell you about bad external links.

- Plan to visually review every page of your site over a period of time (or contract someone to do it for you). Unfortunately, sometimes there is no substitute for a human. For example, a link may now point to a parked domain, and the only way to know is to see it for yourself.

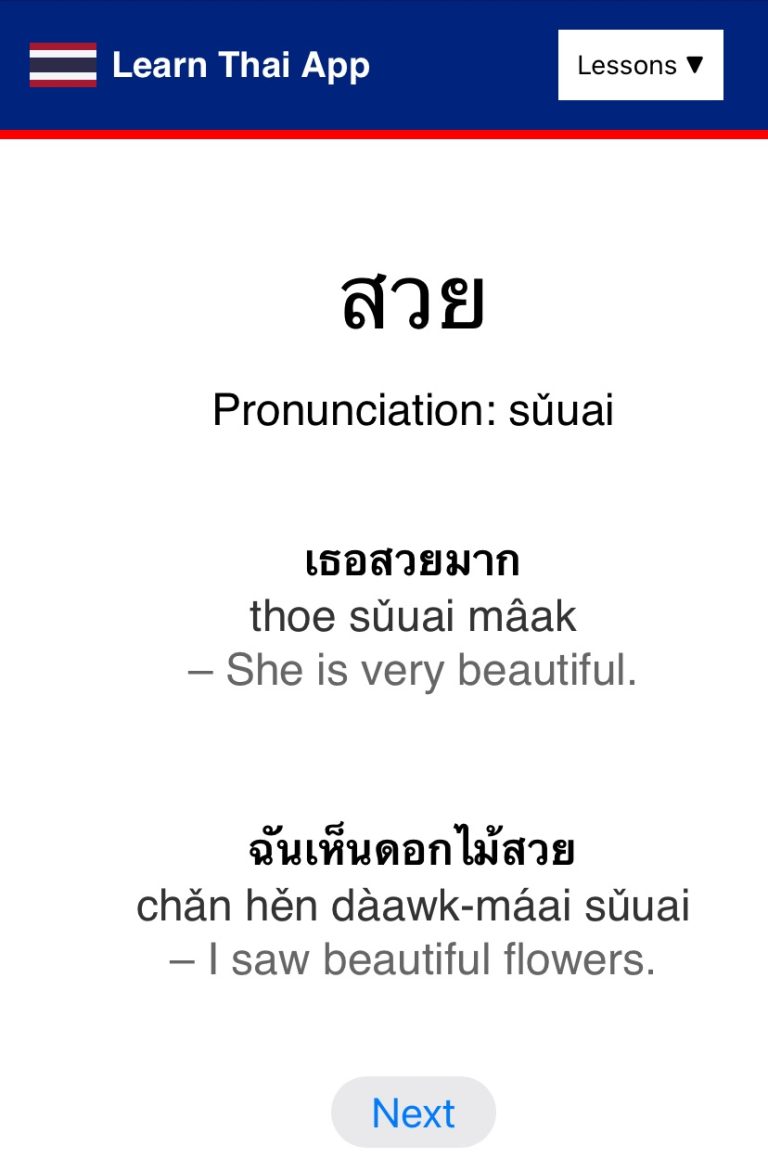

- One neat trick to speed up the visual checking is to run a tool that creates thumbnails of web pages. We made a tool that does this for our script (phpLD). There are probably other tools out there that do this too. It really helped me, because I could quickly spot links that led to a parked domain, since many parked pages look the same.
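If you would rather script a first pass over your external links, something along these lines could work. This is a minimal Python sketch using only the standard library, not how Xenu or any other tool mentioned above works; the names `external_links`, `fetch_status`, and `check_links` are invented for the example. It uses a small pool of 5 workers in the same cautious spirit as the thread setting suggested above.

```python
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
import urllib.error
import urllib.request


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against base_url."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def external_links(html, base_url):
    """Return unique http(s) links in html that point to another host."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    site_host = urlparse(base_url).hostname
    found = []
    for link in collector.links:
        parsed = urlparse(link)
        is_web = parsed.scheme in ("http", "https")
        if is_web and parsed.hostname != site_host and link not in found:
            found.append(link)
    return found


def fetch_status(url, timeout=10):
    """Return the HTTP status for url, or None if the request failed.

    Uses a HEAD request to stay light. Some servers reject HEAD, so a
    real checker may need to retry with GET.
    """
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code
    except (urllib.error.URLError, OSError, ValueError):
        return None


def check_links(urls, fetch=fetch_status, max_workers=5):
    """Check urls with a small worker pool and return {url: status}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        statuses = list(pool.map(fetch, urls))
    return dict(zip(urls, statuses))
```

You would feed it the HTML of one of your pages, for example `check_links(external_links(page_html, "http://example.com/"))`, and then review anything that did not come back as 200. As with the other tools, a parked domain will usually pass this check, so it complements the visual review rather than replacing it.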

Improving link quality on your site is good for your users. It helps search engines move through your site more easily and precisely. And it makes you feel good knowing your site is of high quality. I hope some of you will be inspired to take the time to improve the quality of the links on your site, and hopefully in a few months we’ll see some SEO benefits as well. Good luck!